Bilge Mutlu

Computer Science, Psychology & Industrial Engineering

University of Wisconsin–Madison

I am a Discovery Fellow at the Wisconsin Institute for Discovery, director of the People and Robots Laboratory, and director of the NSF Research Traineeship program INTEGRATE. My research in human-robot interaction builds human-centered principles and methods to enable effective, intuitive interactions between people and robotic technologies. I received my PhD from the Human-Computer Interaction Institute (HCII) at Carnegie Mellon University in 2009.

If you are a UW faculty member, staff, or student interested in shaping the future of embodied AI, let's talk about IDEA.

Building better human-robot interactions

My research takes a design-led approach to human-robot interaction (HRI), building human-centered principles, methods, and tools that enable effective, intuitive interactions between people and robotic technologies and facilitate the successful integration of these technologies into human environments. Highlights from the People and Robots Lab:

Long-term, Real-World HRI

Building autonomous systems that can navigate complex social dynamics in the physical world, with work on long-term human-robot interaction in homes, privacy challenges in complex domestic environments, and social agents that support learning and education.

Human-Robot Collaboration

Building human-in-the-loop interfaces for robots that augment human capabilities while retaining human expertise, autonomy, and trust, along with natural-language-based interfaces and programming tools for collaborative robotics.

About This Research

A central challenge in human-robot interaction is building autonomous systems that can navigate the complex, unpredictable social dynamics of everyday life. Our work deploys robots into homes, schools, and community settings to study how people form relationships with social agents over weeks and months—not just minutes in a lab.

We investigate how robots can support children's learning as reading and educational companions, how families negotiate privacy when a robot becomes part of the household, and how long-term interaction reveals design opportunities that short-term studies miss.

Selected Papers

- Wright, Vegesna, Michaelis, Mutlu, & Sebo. "Robotic reading companions can mitigate oral reading anxiety in children." Science Robotics, 2025

- Ho, Kargeti, Liu, & Mutlu. "SET-PAiREd: Designing for parental involvement in learning with an AI-assisted educational robot." CHI 2025

- Michaelis, Cagiltay, Ibtasar, & Mutlu. "'Off Script': Design opportunities emerging from long-term social robot interactions in-the-wild." HRI 2023

- Lee, Cagiltay, & Mutlu. "The unboxing experience: Exploration and design of initial interactions between children and social robots." CHI 2022 🏅

About This Research

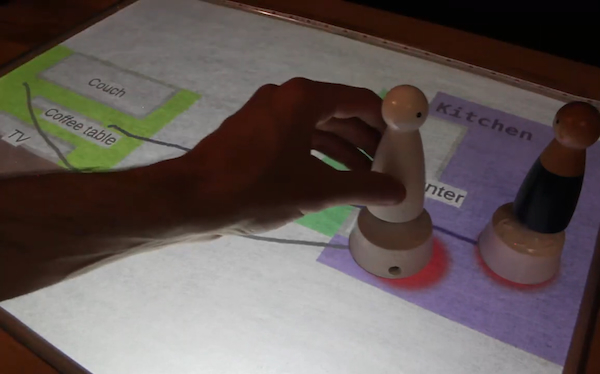

As robots move into workplaces alongside people, a key question is how to augment human capabilities without displacing human expertise and judgment. Our work develops shared-control systems and human-in-the-loop interfaces that let operators leverage robotic precision while retaining autonomy over critical decisions.

We build natural-language-based interfaces and end-user programming tools that make collaborative robotics accessible to non-experts—enabling workers to author, customize, and oversee robot behaviors in real time.

Selected Papers

- Hagenow, Senft, Radwin, Gleicher, Zinn, & Mutlu. "A system for human-robot teaming through end-user programming and shared autonomy." HRI 2024

- Senft, Hagenow, Radwin, Zinn, Gleicher, & Mutlu. "Situated live programming for human-robot collaboration." UIST 2021

- Senft, Hagenow, Welsh, Radwin, Zinn, Gleicher, & Mutlu. "Task-level authoring for remote robot teleoperation." Frontiers in Robotics and AI, 2021

- Rakita, Mutlu, Gleicher, & Hiatt. "Shared control–based bimanual robot manipulation." Science Robotics, 2019

Robot Design & Programming Tools

Building a suite of tools to support the unique and complex task of designing robotics applications for human use, integrating the ways designers and developers work with formal methods for software analysis, verification, and synthesis, and with multimodal and multiagent LLMs that serve as expert critics.

Assistive & Care Robotics

Developing care robots that empower both caregivers and care recipients, conducting research on human-AI and human-robot interaction for people with Down syndrome, and collaborating on work that supports the participation of older adults and people who are blind or have low vision in the broader social and physical world.

About This Research

Designing robot behaviors for human environments is a uniquely complex task that sits at the intersection of interaction design, software engineering, and AI. Our work builds tools that connect these disciplines, integrating the ways designers and developers work with formal methods for software analysis, verification, and synthesis.

Recent efforts incorporate multimodal and multiagent LLMs as expert critics that can evaluate, refine, and verify robot interaction designs. By combining human-centered design processes with rigorous technical methods, we aim to make it possible to author robot behaviors that are not only expressive and intuitive but also provably correct and safe.

Selected Papers

- Lee, Porfirio, Wang, Zhao, & Mutlu. "VeriPlan: Integrating formal verification and LLMs into end-user planning." CHI 2025

- Schoen, Sullivan, Zhang, Rakita, & Mutlu. "Lively: Enabling multimodal, lifelike, and extensible real-time robot motion." HRI 2023 🏆

- Porfirio, Stegner, Cakmak, Sauppé, Albarghouthi, & Mutlu. "Sketching robot programs on the fly." HRI 2023

- Porfirio, Sauppé, Albarghouthi, & Mutlu. "Authoring and verifying human-robot interactions." UIST 2018 🏆

About This Research

Our assistive robotics research is grounded in the belief that technology should empower people—not replace the human relationships at the center of care. We develop robots and AI systems that support both caregivers and care recipients, designing with and for the communities we aim to serve.

This includes research on human-AI and human-robot interaction for people with Down syndrome, conversational telepresence robots for homebound older adults, participatory design methods for aging populations, and robotic systems that support the social and physical participation of people who are blind or have low vision.

Selected Papers

- Hu, Kuribayashi, Wang, Kayukawa, Sato, Mutlu, Takagi, et al. "Robot-assisted group tours for blind people." CHI 2026

- Hu, Stegner, Kotturi, Zhang, Peng, Huq, Zhao, Bigham, et al. "'This really lets us see the entire world': Designing a conversational telepresence robot for homebound older adults." DIS 2024

- Johnson, Sterling, & Mutlu. "'It is easy using my apps': Understanding technology use and needs of adults with Down syndrome." CHI 2024

- Stegner, Senft, & Mutlu. "Situated participatory design: A method for in situ design of robotic interaction with older adults." CHI 2023

People

Interested in joining the People and Robots Lab? Please read this page.

Recognitions & Paper Awards

Selected Paper Awards

Our work has received nearly 30 awards and nominations, including one ten-year impact award, 11 best paper awards, 7 honorable mentions, and 3 poster awards.